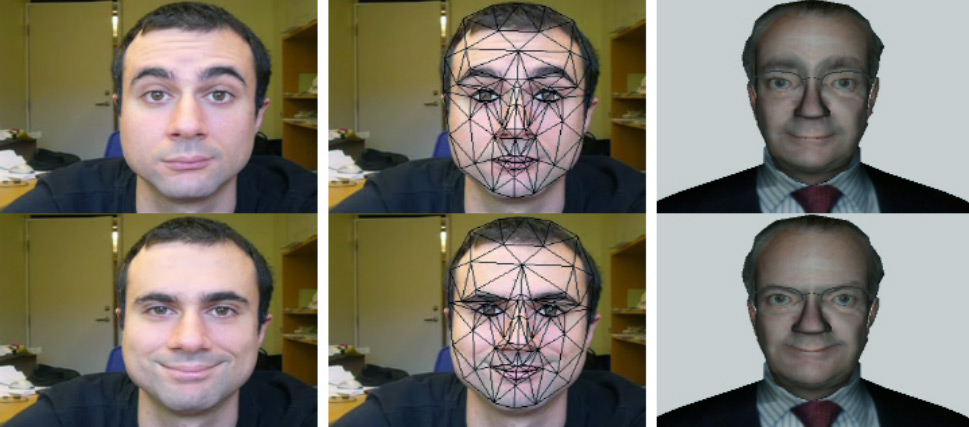

A Non-Invasive Approach for Driving Virtual Talking Heads from Real Facial Movements

Abstract

In this paper, we describe a system that accurately controls the facial animation of synthetic virtual heads using the movements of a real person. These movements are tracked with active appearance models in videos acquired by a low-cost webcam. The tracked motion is then encoded using the widely adopted MPEG-4 Face and Body Animation standard, so that each animation frame is expressed by a compact subset of the facial animation parameters (FAPs) defined by the standard. For each FAP, we precompute the corresponding facial configuration of the target virtual head through an accurate anatomical simulation. The virtual head is then animated, frame by frame, by linearly interpolating the facial configurations corresponding to the FAPs, which makes the animation step simple and straightforward.
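The per-frame interpolation step can be illustrated with a minimal Python sketch. It rests on assumptions that are ours and not stated in the abstract: each precomputed configuration is a mesh obtained by simulating a single FAP at a known reference amplitude, and the displacement a FAP induces scales linearly with its value, so a frame is the neutral mesh plus the weighted sum of per-FAP displacements. The names `blend_faps`, `fap_targets`, and `reference_amplitude` are hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_faps(
    neutral: List[Vertex],
    fap_targets: Dict[int, List[Vertex]],
    reference_amplitude: Dict[int, float],
    frame_faps: Dict[int, float],
) -> List[Vertex]:
    """Return the deformed mesh for one animation frame.

    Assumption (hypothetical): fap_targets[k] is the mesh produced by
    simulating FAP k alone at amplitude reference_amplitude[k], and the
    displacement it causes scales linearly with the FAP value.
    """
    # Start from a mutable copy of the neutral (rest) mesh.
    result = [list(v) for v in neutral]
    for fap_id, value in frame_faps.items():
        # Skip FAPs with no precomputed configuration or zero activation.
        if fap_id not in fap_targets or value == 0.0:
            continue
        # Linear weight: current FAP value relative to the simulated amplitude.
        weight = value / reference_amplitude[fap_id]
        target = fap_targets[fap_id]
        # Add the weighted per-vertex displacement (target - neutral).
        for i, (n, t) in enumerate(zip(neutral, target)):
            for axis in range(3):
                result[i][axis] += weight * (t[axis] - n[axis])
    return [(v[0], v[1], v[2]) for v in result]


# Usage with made-up values: a two-vertex "mesh" and two FAPs.
neutral = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
fap_targets = {
    3: [(0.0, 0.0, 0.0), (0.0, -2.0, 0.0)],  # moves vertex 1 down
    4: [(1.0, 0.0, 0.0), (0.0, -1.0, 0.0)],  # moves vertex 0 along x
}
reference_amplitude = {3: 100.0, 4: 10.0}
frame = blend_faps(neutral, fap_targets, reference_amplitude, {3: 50.0, 4: 5.0})
print(frame)
```

Summing independent per-FAP displacements is the same idea as morph-target (blend-shape) animation; it keeps each frame cheap to compute, at the cost of ignoring any nonlinear interaction between FAPs that a full anatomical simulation of the combined parameters could capture.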